For more than six decades, many researchers and designers of computer programs have been inclined to make their interfaces appear intelligent and human-like. One assumption behind this temptation is that because people already know how to interact with other people, making a computer program act more like a person will improve the user experience. However, practical experience building human-like computer programs, together with research from design and computer science, has challenged this assumption.

Designing computer programs to look, sound, or behave more like humans is often discussed in terms of personification, humanization, or anthropomorphization. (These are separate concepts, but they are often conflated.) Such approaches have many pitfalls, but one of the best-documented effects is that they lead users to expect the computer program to be “smarter” than it really is.

I have captured a few quotes and references from specialists in the field and in academia about this effect:

The Representation of Agents: Anthropomorphism, Agency, and Intelligence by William King and Jun Ohya presents data from one of their experiments that suggests:

Anthropomorphic [Human-like] …forms may be problematic since they may be inherently interpreted as having a high degree of agency and intelligence.

In the book Make It So, authors Chris Noessel and Nathan Shedroff write:

Anthropomorphism can mislead users and create unattainable expectations. Elements of anthropomorphism aren’t necessarily more efficient or necessarily easier to use. Social behavior may suit the way we think and feel, but such interfaces require more cognitive, social, and emotional overhead of their users. They’re much, much harder to build, as well. Finally, designers are social creatures themselves and must take care to avoid introducing their own cultural bias into their creations. These warnings lead us to the main lesson of this chapter.

Lesson: The more human the representation, the higher the expectations of human behavior.

In the MIT Press bestselling book Software Agents, edited by Jeffrey Bradshaw, Don Norman wrote the following:

If the one aspect of people's attitudes about agents is fear over their capabilities and actions, the other is over-exaggerated expectations, triggered to a large extent because much more has been promised than can be delivered. Why? Part of this is the natural enthusiasm of the researcher who sees far into the future and imagines a world of perfect and complete actions. Part of this is in the nature of people's tendency to false anthropomorphizing, seeing human attributes in any action that appears in the least intelligent. Speech recognition has this problem: develop a system that recognizes words of speech and people assume that the system has full language understanding, which is not at all the same thing. Have a system act as if it has its own goals and intelligence, and there is an expectation of full knowledge and understanding of human goals.

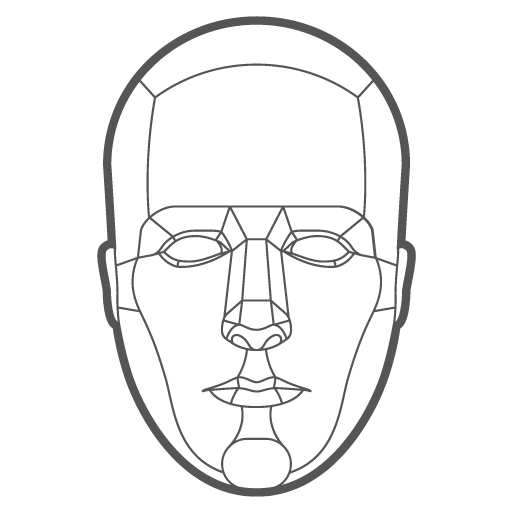

The problem is amplified by the natural tendency of researchers and manufacturers to show their agents in human form. You can imagine the advertisements: "Want to schedule a trip, the new MacroAgent System offers you Helena, your friendly agent, ready to do your bidding." As soon as we put a human face into the model, perhaps with reasonably appropriate dynamic facial expressions, carefully tuned speech characteristics, and human-like language interactions, we build upon natural expectations for human-like intelligence, understanding, and actions.

There are some who believe that it is wrong — immoral even — to offer artificial systems in the guise of human appearance, for to do so makes false promises. Some believe that the more human-like the appearance and interaction style of the agent, the more deceptive and misleading it becomes: personification suggests promises of performance that cannot be met. I believe that as long as there is no deception, there is no moral problem. Be warned that this is a controversial area. As a result, it would not be wise to present an agent in human-like structures without also offering a choice to those who would rather not have them. People will be more accepting of intelligent agents if their expectations are consistent with reality. This is achieved by presenting an appropriate conceptual model — a "system image" — that accurately depicts the capabilities and actions.

In section 12.7 of the popular HCI textbook, Designing the User Interface, the authors write:

The words and graphics in user interfaces can make important differences in people’s perceptions, emotional reactions, and motivations. Attributions of intelligence, autonomy, free will, or knowledge to computers are appealing to some people, but to others such characterizations may be seen as deceptive, confusing, and misleading. The suggestion that computers can think, know, or understand may give users an erroneous model of how computers work and what the machines’ capacities are. Ultimately, the deception becomes apparent, and users may feel poorly treated.

Because users naturally expect human-like programs to be “smarter” than they really are, designers and marketers should be cautious when creating human-like interfaces. Some interfaces, such as chatbots and voice interfaces, make personification impossible to avoid. In these cases, designers and marketers should set clear expectations to avoid user dissatisfaction. For example, the popular communication app Slack includes a built-in chat program called Slackbot. Before using Slackbot, users are told "Slackbot is pretty dumb, but it tries to be helpful." Similarly, tech companies may do well to refrain from marketing their products as "smart" or "intelligent," since doing so only makes the problem worse.

As a designer, I personally gravitate toward principle 4 of calm technology as a way to avoid some of the problems described above. Principle 4 states that machines shouldn't act like humans, and humans shouldn't act like machines.